ChatGPT’s Impact On Thinking And Writing

Within weeks of ChatGPT’s release in November 2022, educators were reporting that their students had submitted assignments written with the help of the AI chatbot.

Of course they had. Why would they not? With packed schedules and numerous assignments, it was almost logical for them to turn to AI.

ChatGPT is an online AI chatbot created by OpenAI. As software that simulates human conversation, it can perform a variety of human-like tasks to help you at work, from answering questions to generating write-ups.

ChatGPT is just one of the latest in a long line of generative AI tools. Others include the document analyser chatbot ChatPDF, image generators DALL-E and MidJourney, and the writing assistant Grammarly – but it is ChatGPT that has captured the world’s imagination.

What ChatGPT Really Is

As its full name “Chat Generative Pre-trained Transformer” suggests, ChatGPT is built on a “transformer” architecture that allows it to craft sentences by predicting the next word in a given sequence. It is a large language model “pre-trained” on huge amounts of text data.
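To make the idea of “predicting the next word” concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT works internally: a transformer is vastly more sophisticated, and this toy simply counts which word most often follows another in a made-up sample sentence. The function names and the sample text are our own illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical sample text, standing in for the "huge amounts of text data".
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))  # "cat" follows "the" most often here
```

Even this crude counter hints at why such systems echo their training text: it can only ever suggest words it has already seen, in combinations it has already seen.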

TechRadar describes it as “the world’s most voracious reader”, which has the ability to engage in sophisticated conversations with its users, beyond what conventional chatbots can do.

ChatGPT generates text that mirrors our expressions, giving responses that are surprisingly human-like. It can also communicate smoothly in several languages – an amazing feat, considering how difficult translation can be.

The Potential and Limits of AI

Instead of asking what ChatGPT can do, the question seems to be what it cannot do now, and what it will eventually be able to do.

Paying users are already enjoying the most advanced version of OpenAI’s language model, called GPT-4. This version is more powerful than the free ChatGPT (based on GPT-3.5), which could already generate large amounts of text for various purposes such as emails, business proposals or methodology sections for research papers.

GPT-4 has empowered users to an extent that was previously unimaginable. Reports claim that the latest version can pass legal exams with ease, provide the tools to code entire websites, and even generate prompts for images to aid designers in their creative pursuits.

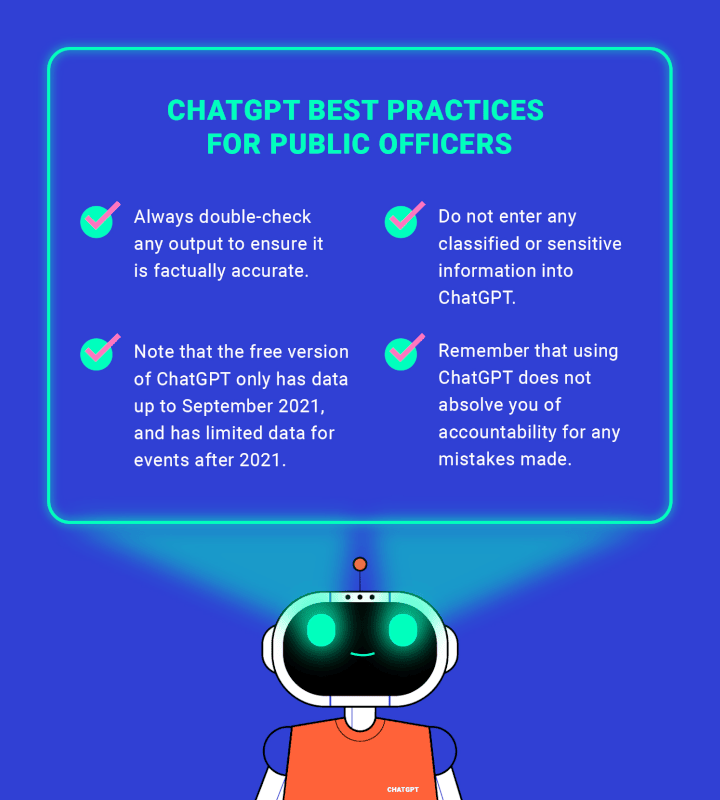

Yet, AI does have its limitations. In current versions, AI writing tools cannot reliably reference sources (from films to written texts) and are prone to “hallucinations”, a term that describes how AI can confidently assert falsehoods. AI is essentially only as reliable as its data sources, such as the Internet, and these may not be entirely reliable.

Consider this: Would you want to read this article if it were written by AI? An AI-generated piece can only be a pastiche of online articles already published. You are reading this article in hopes of gleaning insight from people who have lived experiences in the real world. AI cannot give this.

Divided Opinions Over AI

Experts who have weighed in on the question of AI-generated writing are divided.

ChatGPT has been accused of being “obsessed with cliches”, yet others argue that using it will help “raise expectations – and level the playing field”. The linguist Noam Chomsky decried it as manifesting “something like the banality of evil: plagiarism and apathy and obviation”.

Meanwhile, the prevailing inclination in Singapore seems to be that we should learn how to use ChatGPT well, and ethically.

The Singapore Public Service plans to incorporate Pair (a version of ChatGPT developed by Open Government Products) into its work. As generative AI tools become acceptable in the workplace, how can we better understand the impact AI systems might have on the way we work?

Writing as a Tool for Thinking

At the Language and Communication Centre of NTU, we teach students how to analyse and synthesise the ideas of others, so that they can develop their own perspectives and arguments. Our goal is to give students the skills to contribute clearly and confidently to public discourse.

These communication skills, however, are like a muscle: they require exercise. Without repeated practice and use, those communication muscles get flabby, and students find reading and writing even more difficult. If students resort to AI systems to communicate their ideas, these fundamental communication skills could become impoverished.

In education studies, researchers agree that writing can be a tool for thinking. The act of writing forces us to put our inchoate thoughts into words and sentences, and this struggle to make meaning often leads to new ideas.

As Richard E. Miller and Ann Jurecic, Rutgers University English professors and authors of Habits of the Creative Mind, put it: “Writers discover what they think not before they write but in the act of writing.”

Speedbumps While Staying Open To Change

If writing is a tool for thinking, and we allow AI to do our writing for us, what might happen to our ability to think?

In an open letter that asks AI creators to consider AI’s long-term effects on civilisation, the writers note the risk of AI inundating public discourse with disinformation and making certain jobs obsolete. The environmental cost of running AI technology and its potential impact on warfare are also significant factors that should make us reflect on our embrace of AI.

Yet, as with most technological developments, society, from workers such as public officers to students, will eventually become adept at utilising AI writing tools. Indeed, we must be open to such technologies or risk being left behind.

Professor Steven Mintz, from the University of Texas at Austin, described ChatGPT as merely another in a series of technologies that have altered education: “If a program can do a job as well as a person, then humans shouldn’t duplicate those abilities; they must surpass them.”

He believes that humans could thrive alongside AI by:

- Specialising in knowing the best prompts and questions to ask

- Going beyond crowdsourced knowledge to develop insights or skills that lie beyond AI’s capabilities, such as fact-checking

- Turning insights into action and real-world solutions

While we want to be careful not to let AI do all our writing (and thinking) for us, we can engage with this new tool as an opportunity to grow, and make our minds bend in new ways.

As anyone who has playfully put prompts into ChatGPT will know, this tool can be surprising and fun; the very act of engaging with it can perhaps make us think in new ways. But for articles such as this one, we should not be in a rush to let AI replace the human writer.

Contributors:

Dr Angela Frattarola is the Director of the Language and Communication Centre (LCC) at NTU. Her research interests include writing pedagogy, modernist literature and sound studies.

Eunice Tan is a lecturer in the LCC. Her studies and research focus on educational technology, English Language Teaching, and intercultural and interdisciplinary communication.

Ho Jia Xuan is a lecturer in the LCC. His research interests lie in the field of time and narrative, and more recently, in the exploration of technology-based pedagogies.

- Posted on: Sep 22, 2023

- Text by: Dr Angela Frattarola, Eunice Tan, Ho Jia Xuan

- Illustration by: Ryan Ong